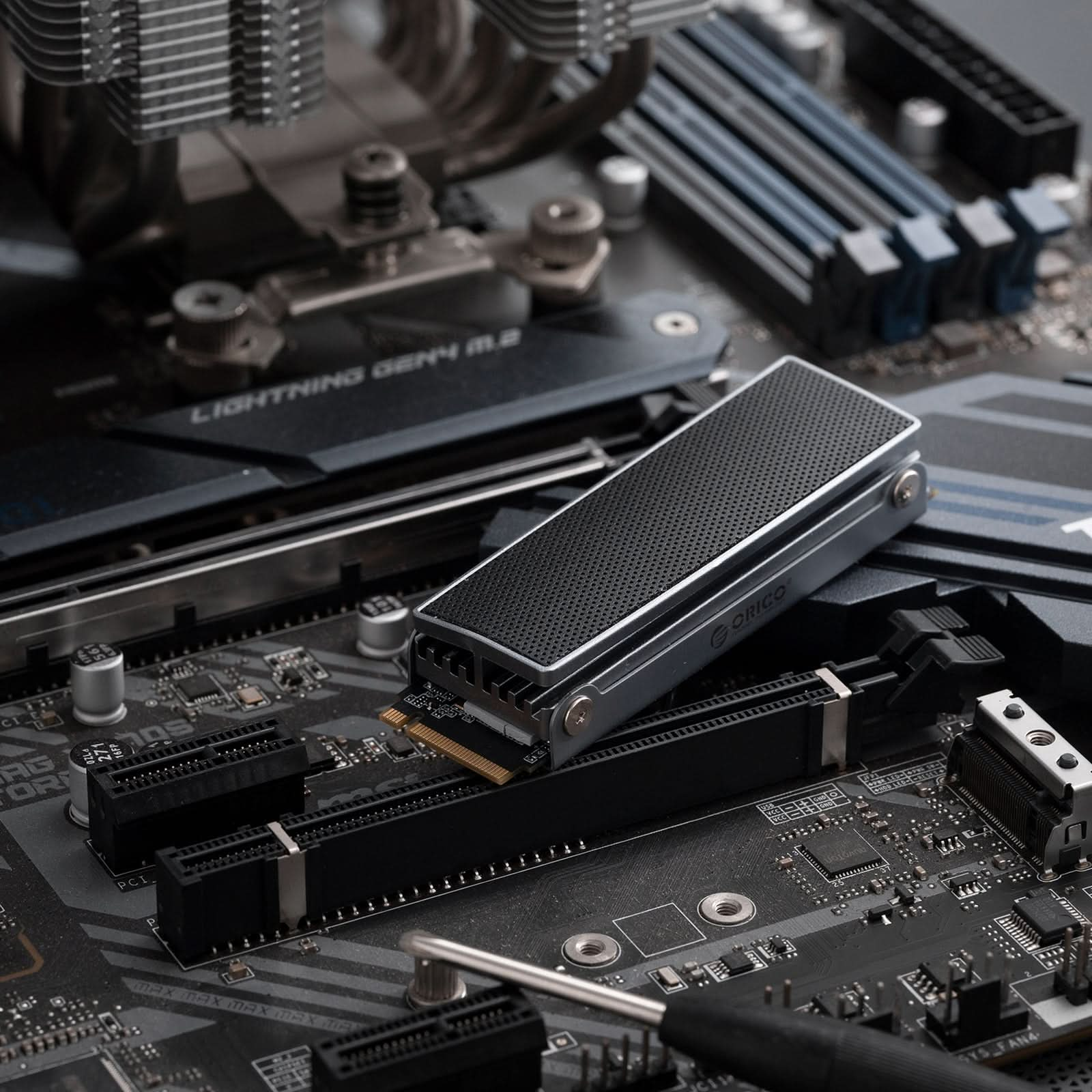

ORICO OSC PCIe 4.0 NVMe SSD with Built-in Heatsink

Commitments

- Global Shipping

- 30-Day Money Back Guarantee

- Lifetime Customer Support

Shipping

- Free shipping on orders over $39.99

- Estimated delivery time: 5-14 business days

- Customs fees and VAT are covered for US/EU/UK/CA/AU/SG, etc.


Specifications

| Model | OSC |
| --- | --- |
| Form Factor | M.2 2280 |
| Interface | PCIe Gen4 x4 |
| Protocol | NVMe 2.0 |
| Read Speed | Up to 7450 MB/s |
| Write Speed | Up to 6600 MB/s |
| Random Read (IOPS) | 1TB: 1128K / 2TB: 1000K / 4TB: 987K |
| Random Write (IOPS) | 1TB: 902K / 2TB: 900K / 4TB: 860K |
| MTBF | ≥ 1.5 million hours |
| Endurance (TBW) | 1TB: 600TBW / 2TB: 1200TBW / 4TB: 2400TBW |
| Power Supply | DC 3.3V ±5% |
| Operating Temperature | 0°C to 70°C |
| Storage Temperature | -40°C to 85°C |
| Shock Resistance | 1500G / 0.5ms / Half Sine Wave |


Buy Direct from ORICO Store

Free Shipping

All orders over $39.99, except heavy items, automatically qualify for free shipping. Learn more

30-Day Return Policy

We offer a 30-day, hassle-free return policy, so you can shop with confidence. Learn more

Expert Support

Our dedicated team of tech pros is always ready to assist you. Contact us

Customs Fees & VAT Included

Customs fees and VAT are covered for US/EU/UK/CA/AU, etc.